regularization machine learning mastery

I have tried my best to incorporate all the Whys and Hows. Sometimes one resource is not enough to get you a good understanding of a concept.

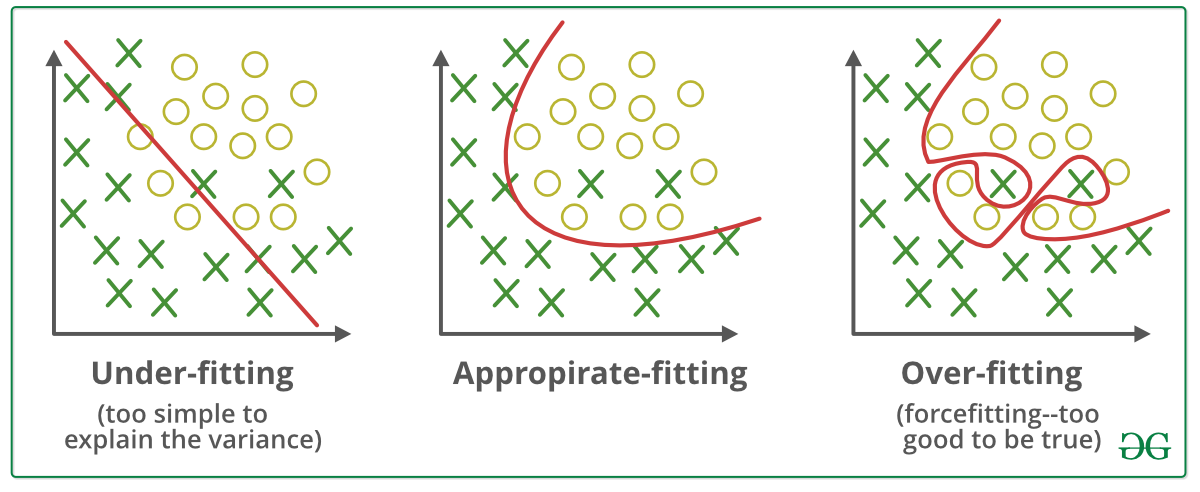

In other words, this technique discourages us from learning a more complex or flexible model, to avoid the problem of overfitting.

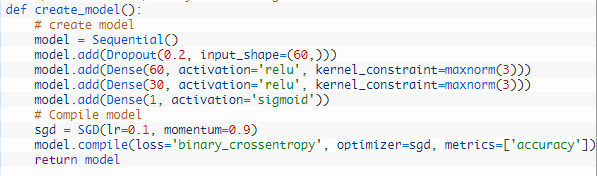

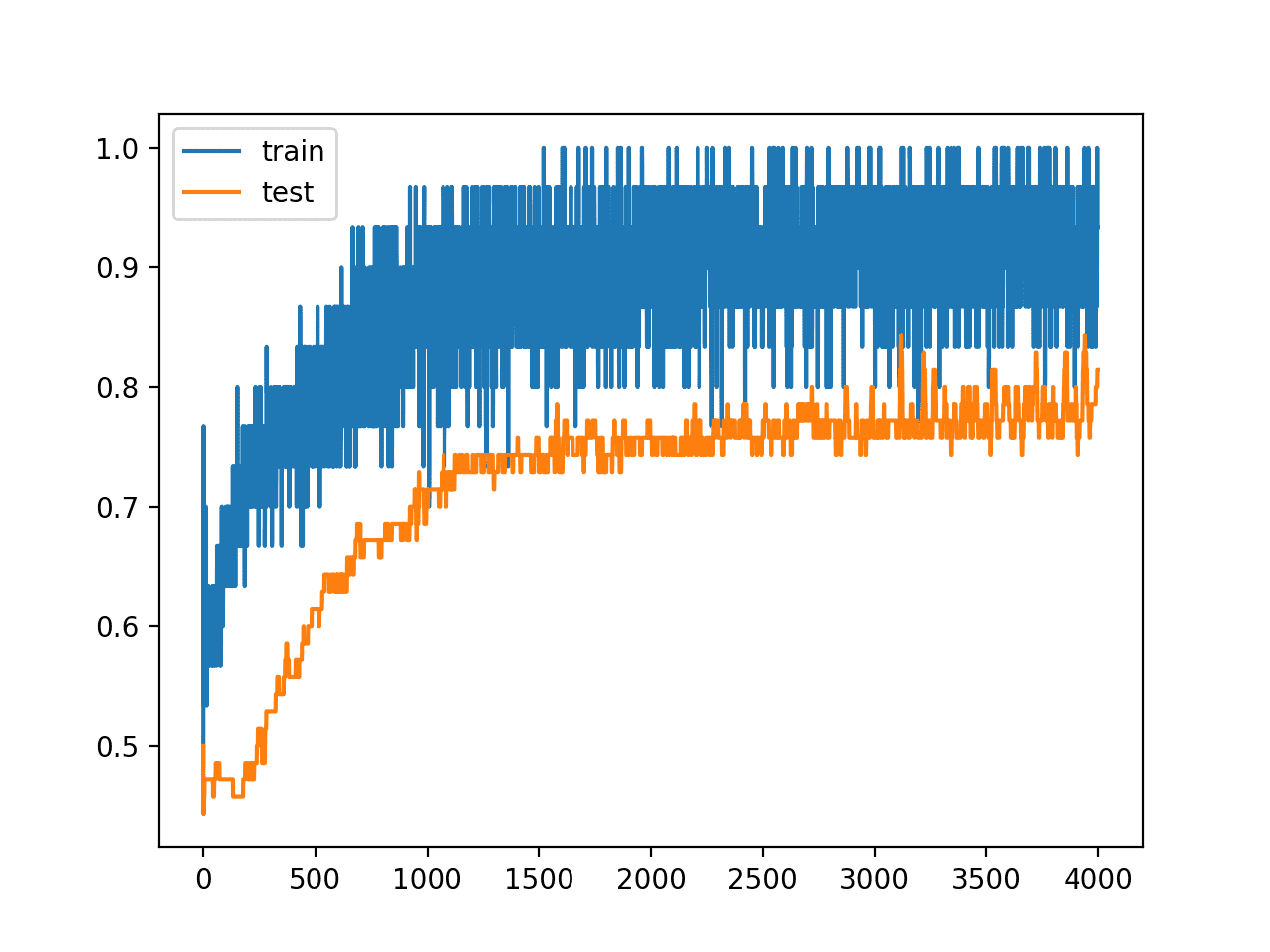

Make your machine learning algorithms learn, not memorize. Within the production pipeline, we want our machine learning applications to perform well on unseen data. A simple and powerful regularization technique for neural networks and deep learning models is dropout. Deep learning neural networks are likely to quickly overfit a training dataset with few examples.

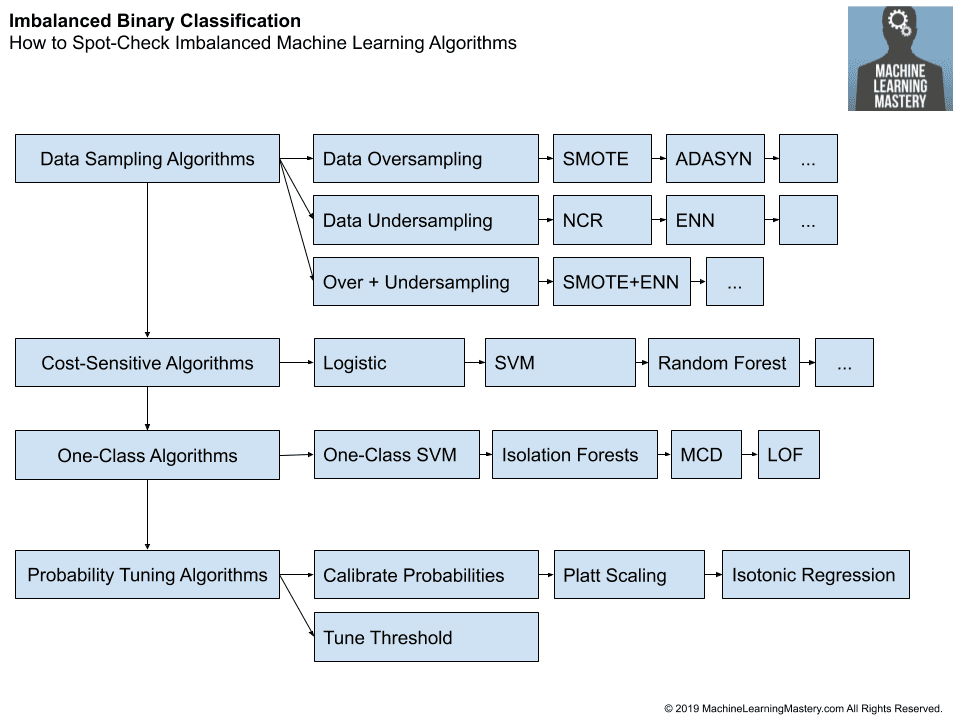

A single model can be used to simulate having a large number of different network architectures. In this post, let's go over some of the widely used regularization techniques and the key differences between them. Regularization is the method of adding information in order to solve an ill-posed problem or to prevent overfitting.

The following article provides an outline for regularization in machine learning. It doesn't really matter how well an ML application performs on training data if it cannot deliver accurate results on test data. Ensembles of neural networks with different model configurations are known to reduce overfitting, but require the additional computational expense of training and maintaining multiple models.

If the model is logistic regression, then the loss is the log loss. Below is a regularization library I highly recommend; go on, play with it. In order to create a less complex (parsimonious) model when you have a large number of features in your dataset, some regularization techniques can be used.
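
For logistic regression, the loss is usually the log loss (binary cross-entropy). The sketch below is only an illustration: the function names, the clipping constant `eps`, and the choice of an L2 penalty are assumptions made for this example, not something the text above prescribes.

```python
import math


def log_loss(y_true, probs, eps=1e-12):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def regularized_log_loss(y_true, probs, weights, lam):
    """Log loss plus an L2 penalty on the weights (illustrative choice)."""
    return log_loss(y_true, probs) + lam * sum(w * w for w in weights)


labels = [0, 1]
predicted = [0.5, 0.5]          # a model that is maximally unsure
weights = [1.0, -2.0]           # hypothetical model coefficients

print(log_loss(labels, predicted))
print(regularized_log_loss(labels, predicted, weights, lam=0.01))
```

The penalty only depends on the weights, so two models with the same predictions can still have different regularized losses.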

Also, it enhances the performance of models. Optimization function = Loss + Regularization term. It is very important to understand regularization to train a good model.
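
The relation "optimization function = loss + regularization term" can be sketched in a few lines. This is a minimal illustration that assumes mean squared error as the loss and a sum of squared weights as the regularization term; the names `mse`, `l2_penalty`, and `lam` are made up for the example.

```python
def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def l2_penalty(weights):
    """Sum of squared weights, used here as the regularization term."""
    return sum(w ** 2 for w in weights)


def regularized_objective(y_true, y_pred, weights, lam):
    """Objective = loss + lam * regularization term."""
    return mse(y_true, y_pred) + lam * l2_penalty(weights)


y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 4.0]
weights = [0.5, -1.0]           # hypothetical model coefficients

plain = regularized_objective(y_true, y_pred, weights, lam=0.0)
penalized = regularized_objective(y_true, y_pred, weights, lam=0.1)
print(plain, penalized)
```

With `lam=0.0` the objective is just the loss; increasing `lam` makes large weights more expensive.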

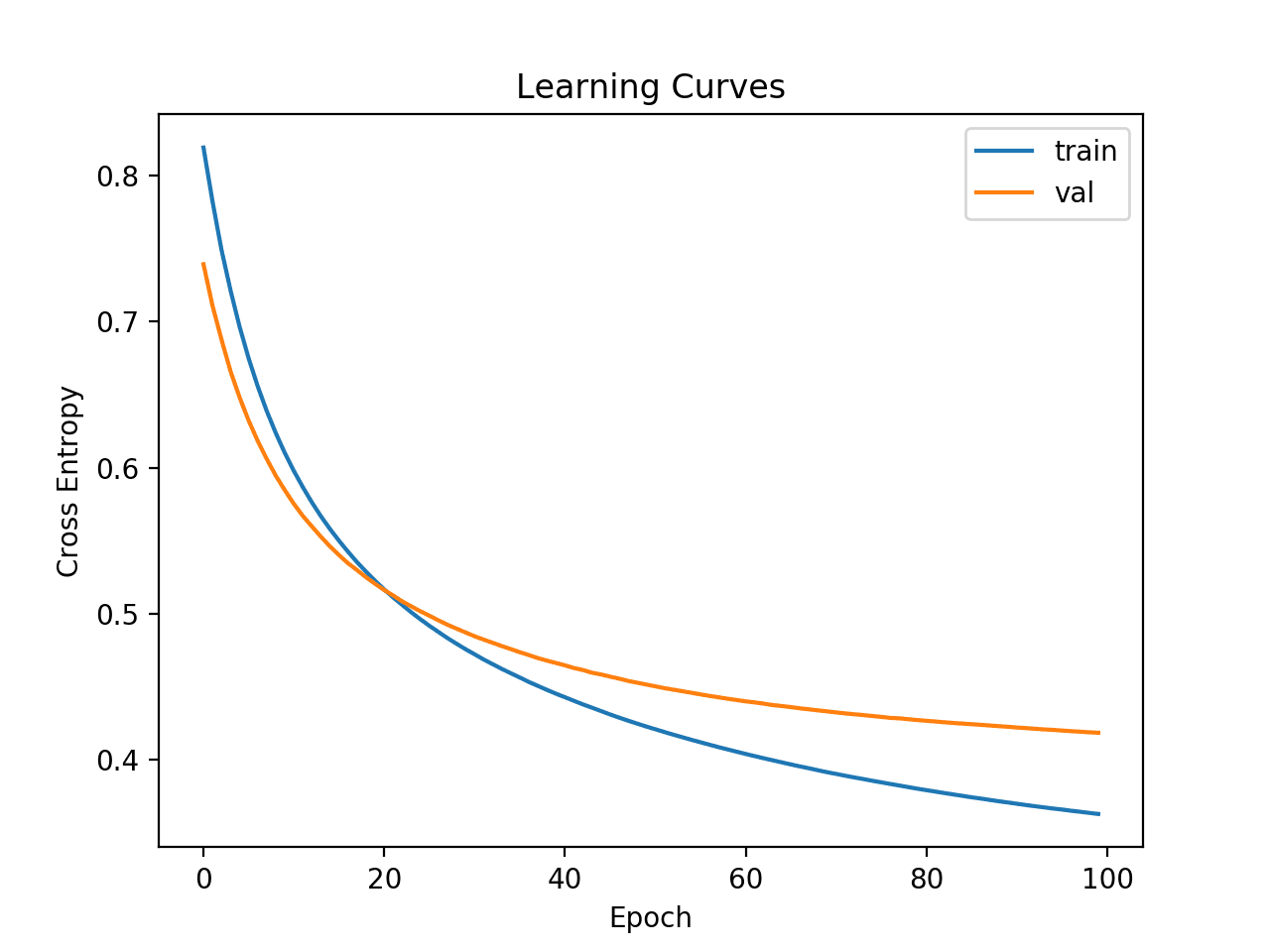

Sometimes a machine learning model performs well on the training data but does not perform well on the test data. Regularization is used in machine learning as a solution to overfitting: it reduces the variance of the model under consideration. Gradient Descent. Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data.

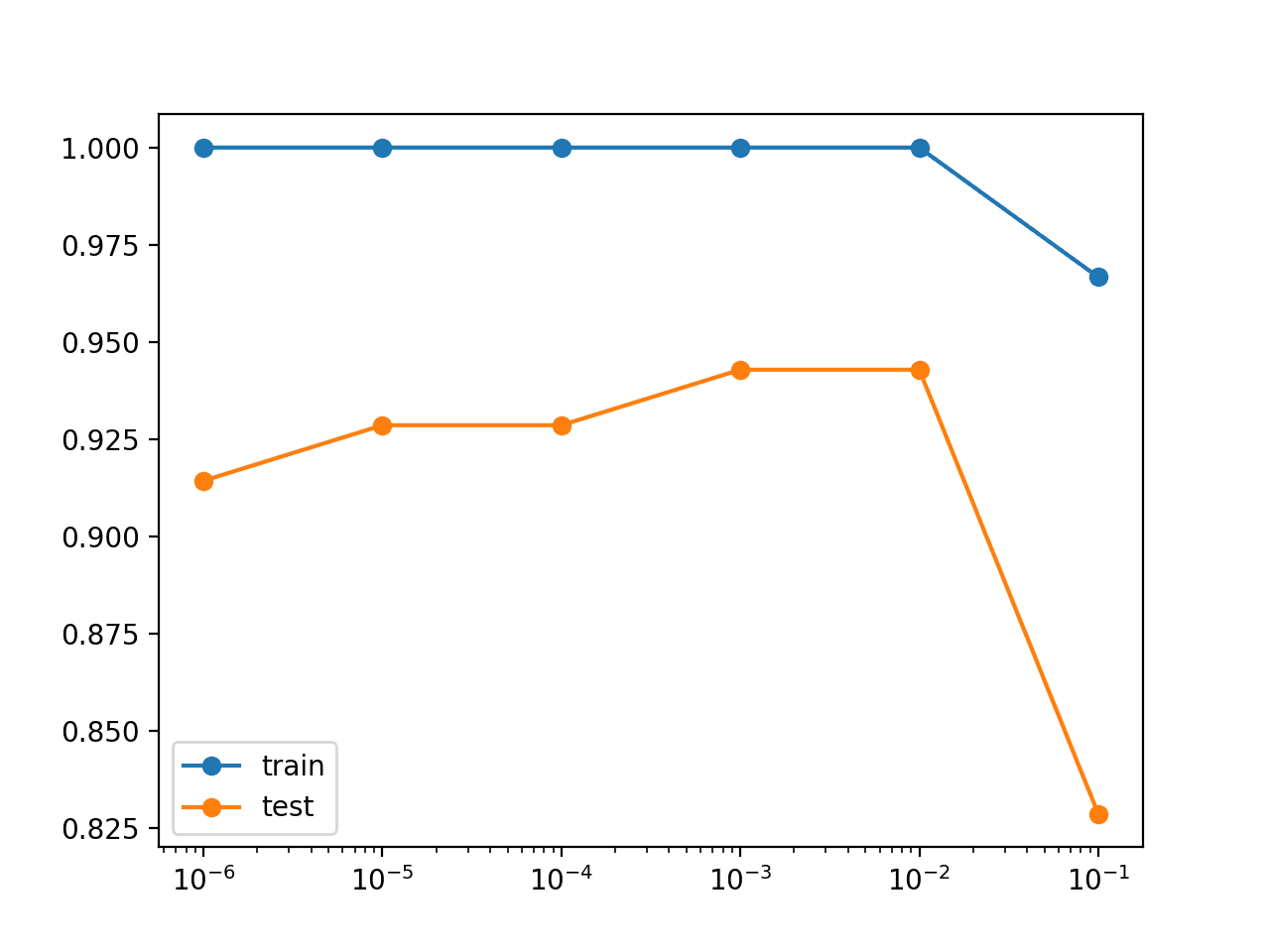

Regularization can be implemented in multiple ways, by modifying the loss function, the sampling method, or the training approach itself. In my last post, I covered the introduction to regularization in supervised learning models. Activity (or representation) regularization provides a technique to encourage the learned representations, that is, the output or activation of the hidden layer or layers of the network, to stay small and sparse.

This is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero. Regularization is a must for a model where noise is involved and your first predictor is less than 95-98%. L2 regularization or Ridge Regression.
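
To see how an L2 (ridge) penalty shrinks a coefficient towards zero, consider the simplest possible case: one feature and no intercept. The closed form below follows from minimizing `sum((y - w*x)^2) + lam * w^2`; the data and the function name `ridge_slope` are invented for this sketch.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form ridge estimate for y ~ w * x (one feature, no intercept).

    Minimizes sum((y - w*x)^2) + lam * w^2.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# A larger penalty gives a smaller coefficient.
for lam in (0.0, 1.0, 10.0, 100.0):
    print(lam, ridge_slope(xs, ys, lam))
```

With `lam=0.0` this is ordinary least squares. As `lam` grows, the estimate moves towards zero but does not become exactly zero for any finite `lam`.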

Data scientists typically use regularization in machine learning to tune their models in the training process. Concept of regularization. In this post you will discover activation regularization as a technique to improve the generalization of learned features in neural networks.

Moving on with this article on regularization in machine learning. You can refer to this playlist on YouTube for any queries regarding the math behind the concepts in machine learning. How the dropout regularization technique works.
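
As a rough illustration of how dropout works, the sketch below randomly zeroes activations during training and rescales the survivors so that the expected value stays the same. It assumes the "inverted dropout" variant and uses plain Python lists; the function name and the `rng` parameter are choices made for this example, not part of any library API.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of activations.

    Each value is zeroed with probability `rate`; survivors are scaled by
    1 / (1 - rate). At inference time the input is returned unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)          # seeded so the example is repeatable
hidden = [1.0] * 10

print(dropout(hidden, rate=0.5, rng=rng))             # training: some zeros
print(dropout(hidden, rate=0.5, training=False))      # inference: unchanged
```

Because a different random subset of units is dropped on every call, each training step effectively sees a different thinned network.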

In simple words, regularization discourages learning a more complex or flexible model, to prevent overfitting. In the context of machine learning, regularization is the process that shrinks the coefficient estimates towards zero.

Setting up a machine learning model is not just about feeding it the data. I have learnt regularization from different sources, and I feel learning from different sources helps in understanding a concept. It is a technique to prevent the model from overfitting by adding extra information to it.

Regularization is a widely used technique to penalize complex models in machine learning. It is deployed to reduce overfitting, or to shrink generalization error, by keeping network weights small. Regularized cost function and Gradient Descent. Regularization in Machine Learning: What is Regularization?
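
A regularized cost function changes the gradient descent update only slightly: the penalty adds an extra term that pulls the weight towards zero at every step. The sketch below assumes a one-feature linear model, mean squared error, and an L2 penalty on the weight only (not on the intercept); the learning rate, step count, and data are arbitrary choices for illustration.

```python
def fit_gd(xs, ys, lam=0.0, lr=0.05, steps=2000):
    """Gradient descent on J(w, b) = mean((w*x + b - y)^2) + lam * w^2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the loss, plus the gradient of the penalty (2 * lam * w).
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs)) + 2.0 * lam * w
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]      # exactly y = 2x + 1

w_plain, b_plain = fit_gd(xs, ys, lam=0.0)
w_reg, b_reg = fit_gd(xs, ys, lam=1.0)
print("no penalty:  ", w_plain, b_plain)
print("with penalty:", w_reg, b_reg)
```

Without the penalty the fit recovers the slope of the data; with the penalty the learned slope is smaller, which is the "keeping weights small" effect described above.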

Equation of general learning model. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting. A simple relation for linear regression looks like this.
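
A common way to write this relation, given here as an assumption since the formula itself is not shown above, uses a response $Y$, predictors $X_1, \dots, X_p$, and coefficients $\beta_0, \dots, \beta_p$. The fitting procedure then minimizes the residual sum of squares (RSS) over $n$ training examples:

```latex
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p

\mathrm{RSS} = \sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Bigr)^2
```

Regularization then adds a penalty on the coefficients $\beta_1, \dots, \beta_p$ to this loss, weighted by a tuning parameter often written as $\lambda$.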

It is one of the most important concepts of machine learning. So the systems are programmed to learn and improve from experience automatically. Introduction to Regularization Machine Learning.

This technique prevents the model from overfitting by adding extra information to it. In other words, this technique discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. Let us understand this concept in detail.

How to use dropout on your input layers. Hello reader. This blog post aims at an in-depth understanding of regularization techniques. Using cross-validation to determine the regularization coefficient.
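
One way to use cross-validation to pick the regularization coefficient is to try a few candidate values and keep the one with the lowest average validation error. The sketch below assumes a one-feature ridge model without intercept, contiguous folds (so the data should already be in random order), and synthetic data; all names and candidate values are illustrative.

```python
import random


def ridge_slope(xs, ys, lam):
    """Ridge estimate for y ~ w * x (one feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def kfold_indices(n, k):
    """Split range(n) into k contiguous folds of near-equal size."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cv_error(xs, ys, lam, k=5):
    """Mean validation MSE of the ridge fit over k folds."""
    n = len(xs)
    total = 0.0
    for fold in kfold_indices(n, k):
        held_out = set(fold)
        train_x = [xs[i] for i in range(n) if i not in held_out]
        train_y = [ys[i] for i in range(n) if i not in held_out]
        w = ridge_slope(train_x, train_y, lam)
        total += sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold)
    return total / k


def select_lambda(xs, ys, candidates, k=5):
    """Return the candidate with the lowest cross-validated error."""
    return min(candidates, key=lambda lam: cv_error(xs, ys, lam, k))


rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(30)]
ys = [2.0 * x + rng.gauss(0.0, 0.5) for x in xs]    # noisy synthetic data

candidates = [0.0, 0.1, 1.0, 10.0]
best = select_lambda(xs, ys, candidates)
print("selected lambda:", best)
```

The validation folds are never used to fit the coefficient, so the selected value reflects performance on data the model has not seen.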

It applies to objective functions in ill-posed optimization problems. Regularization in machine learning is an important concept, and it addresses the overfitting problem.

Regularization is one of the most important concepts of machine learning. By Data Science Team 2 years ago. L1 regularization or Lasso Regression.
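
An L1 (lasso) penalty behaves differently from an L2 penalty: it can set a coefficient to exactly zero. For a single feature without intercept, the minimizer of `0.5 * sum((y - w*x)^2) + lam * |w|` has a closed form based on soft-thresholding. The sketch below uses that simplified setting; the data and function names are made up for illustration.

```python
def soft_threshold(z, lam):
    """Move z towards zero by lam, clamping to zero inside [-lam, lam]."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_slope(xs, ys, lam):
    """Minimizer of 0.5 * sum((y - w*x)^2) + lam * |w| for one feature."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam) / sxx


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in (0.0, 30.0, 60.0):
    print(lam, lasso_slope(xs, ys, lam))
```

Once `lam` exceeds the absolute value of `sum(x * y)`, the coefficient is exactly zero, which is why L1 regularization is often associated with feature selection.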

It is not a complicated technique, and it simplifies the machine learning process. Regularization is essential in machine learning and deep learning. It means the model is not able to perform well on unseen data.

In this post you will discover the dropout regularization technique and how to apply it to your models in Python with Keras. The cheat sheet below summarizes different regularization methods. After reading this post you will know.

When you are training your model through machine learning with the help of. Regularization is one of the basic and most important concepts in the world of machine learning. Machine learning involves equipping computers to perform specific tasks without explicit instructions.

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

Weight Regularization With Lstm Networks For Time Series Forecasting

Machine Learning Mastery With R Get Started Build Accurate Models And Work Through Projects Step By Step Pdf Machine Learning Cross Validation Statistics

Day 3 Overfitting Regularization Dropout Pretrained Models Word Embedding Deep Learning With R

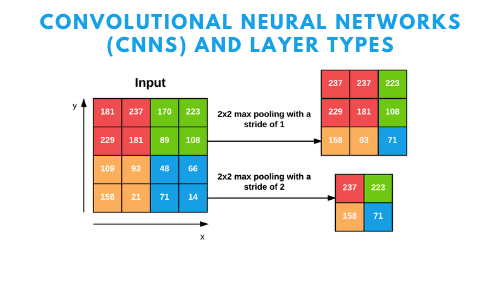

Convolutional Neural Networks Cnns And Layer Types Pyimagesearch

Machine Learning Algorithm Ai Ml Analytics

Machine Learning Mastery Workshop Enthought Inc

Regularisation Techniques In Machine Learning And Deep Learning By Saurabh Singh Analytics Vidhya Medium

Essential Cheat Sheets For Machine Learning Python And Maths 2018 Updated Favouriteblog Com

Various Regularization Techniques In Neural Networks Teksands

Tensorflow 2 Tutorial Get Started In Deep Learning With Tf Keras

Linear Regression For Machine Learning

A Tour Of Machine Learning Algorithms

What Is Regularization In Machine Learning

Github Dansuh17 Deep Learning Roadmap My Own Deep Learning Mastery Roadmap

A Gentle Introduction To Dropout For Regularizing Deep Neural Networks